AURA

The Future of Music Curation

Research Presentation at Parsons School of Design, May 11, 2017.

AURA is a speculative interaction design that explores the future of listening to music. The design integrates electroencephalography (EEG) sensors that measure the user’s brainwave activity to generate a personal music playlist. AURA is envisioned as the successor to today’s streaming services, such as Apple Music and Spotify. The exploration connects the emerging market of machine learning with the transition from the internet of things to the “brain of things” as the next innovation in biosensing technology. The goal is for the user to experience a generative playlist without physically making choices.

“The internet’s completely over. The internet’s like MTV. At one time MTV was hip and suddenly it became outdated. Anyway, all these computers and digital gadgets are no good.” — Prince

User Narrative

Preface

It is the year 2037, and much has changed since the beginning of the twenty-first century. Self-driving cars have become the norm, people have moved from mobile and tablet devices to screen-less interactions, and many of our electronic devices have transitioned from the internet of things to brain-controlled objects. Back in 2016, the World Economic Review predicted that more of our everyday items would be operated by brain activity by 2045, and that has started to become a reality. People no longer manually stream music from a desktop or mobile device; they now listen to playlists generated from their own brainwave data.

User Persona

The ideal user will most likely experience this product in a solitary listening environment. They are young post-millennials who come from families that can afford the latest technologies in their homes. They are also young professionals who devote time to themselves to relax, exercise, or pursue other activities that keep them focused on their own environment. Another ideal user comes from Generation X or Generation Y (Millennials) and has experienced the evolution of music technology products, from purchasing full albums to purchasing individual tracks. This user likes to keep up with emerging technology. Most importantly, these users are passionate about music and their favorite artists, and they are curious to discover what other music is out there for them to enjoy.

User Interaction

In an environment where electronic devices have gone screen-less and become integrated into our bodies, AURA is an embedded chip placed inside each ear that wirelessly reads the user’s alpha brainwaves from the back of the head. This EEG-reading technology analyzes the user’s attentive and meditative states to determine the ideal music playlist. For example, if the user is relaxed and their preferred genre is rock, AURA will select music in that genre suited to the relaxed state for as long as the state continues. AURA also recognizes moments when the user’s attention is required and adjusts the volume based on the environment. Music can also change based on the user’s mood and emotions. Over time, AURA learns more about the individual’s taste, playing the music they want at the appropriate moments in real time.
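The selection logic described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration, not part of the AURA design itself: the `Reading` type, the 0 to 100 attention and meditation scores, the threshold of 60, and the rule of halving the volume when attention is required are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One hypothetical EEG-derived reading; both scores assumed to be 0-100."""
    attention: int
    meditation: int


def pick_mode(reading: Reading, threshold: int = 60) -> str:
    """Classify a reading as 'focused', 'relaxed', or 'neutral'.

    The threshold is an illustrative value, not a calibrated one.
    """
    if reading.attention >= threshold and reading.attention > reading.meditation:
        return "focused"
    if reading.meditation >= threshold and reading.meditation >= reading.attention:
        return "relaxed"
    return "neutral"


def next_selection(reading: Reading, preferred_genre: str,
                   base_volume: float = 0.7) -> dict:
    """Combine the user's preferred genre with their current state.

    When the user's attention is required ('focused'), the volume is halved;
    otherwise the base volume is kept.
    """
    mode = pick_mode(reading)
    volume = round(base_volume * 0.5, 2) if mode == "focused" else base_volume
    return {"genre": preferred_genre, "mode": mode, "volume": volume}


if __name__ == "__main__":
    # A relaxed listener who prefers rock keeps the base volume.
    print(next_selection(Reading(attention=20, meditation=80), "rock"))
    # A listener whose attention is required gets a lower volume.
    print(next_selection(Reading(attention=85, meditation=30), "rock"))
```

A real system would presumably learn these thresholds per user over time rather than hard-coding them, which is the role the narrative gives to AURA's ongoing learning.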

Process

Initial Sketches

The ideation of the product started with a sketched-out scenario of how the user interacts with the product while listening to music. The first sketch grew out of a different concept, which evolved into the current iterations. In Sketch Prototype 1, the user wears an EEG biosensor helmet that controls sound output from a desktop platform.

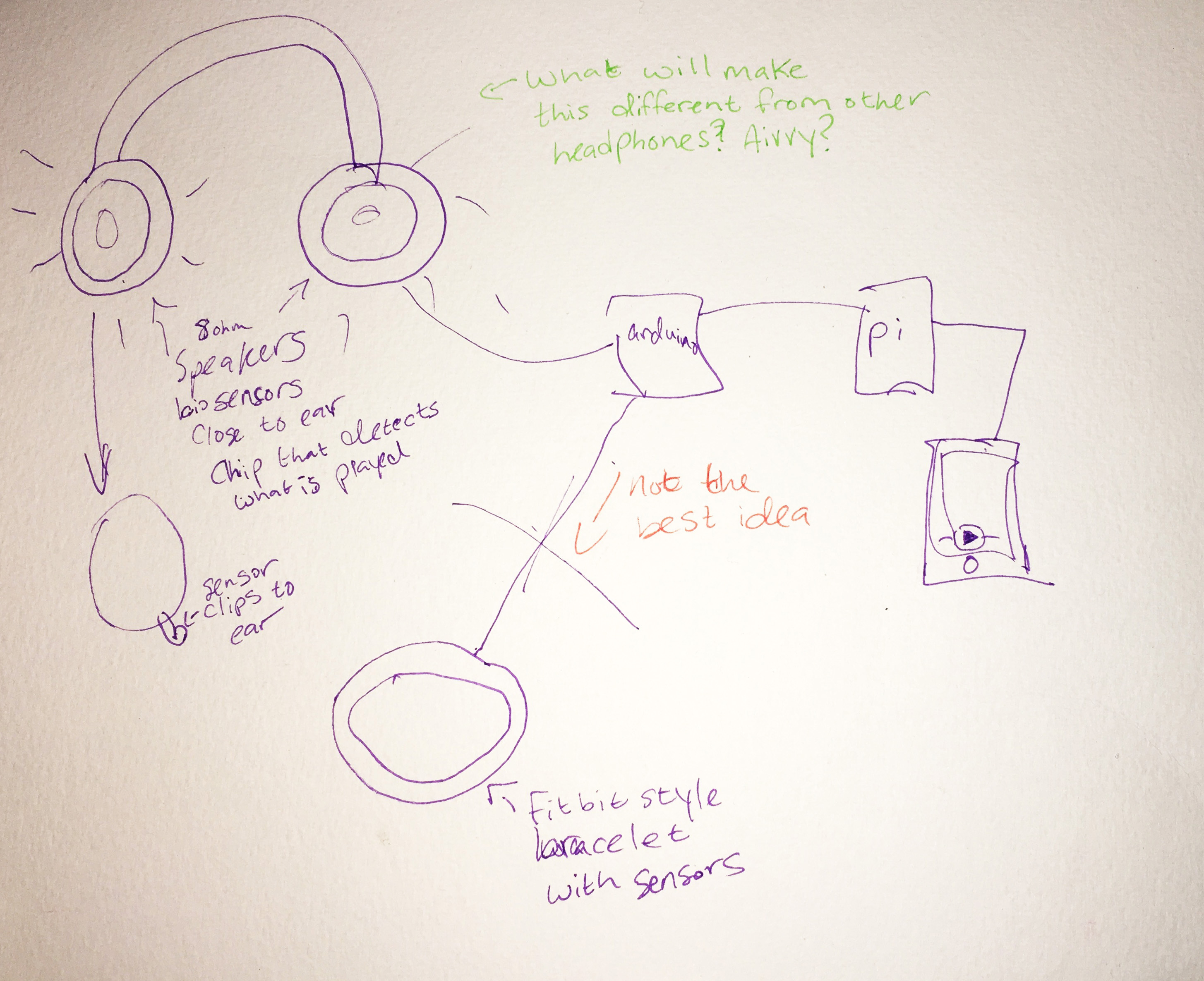

Sketch Prototype 2 dives into the features of the designed product. Design questions are raised to determine where the potential wearable device would be positioned and how it would serve its purpose. Different hardware technologies are also included in the sketch to map out the possible ecosystem.

Usability and Functionality

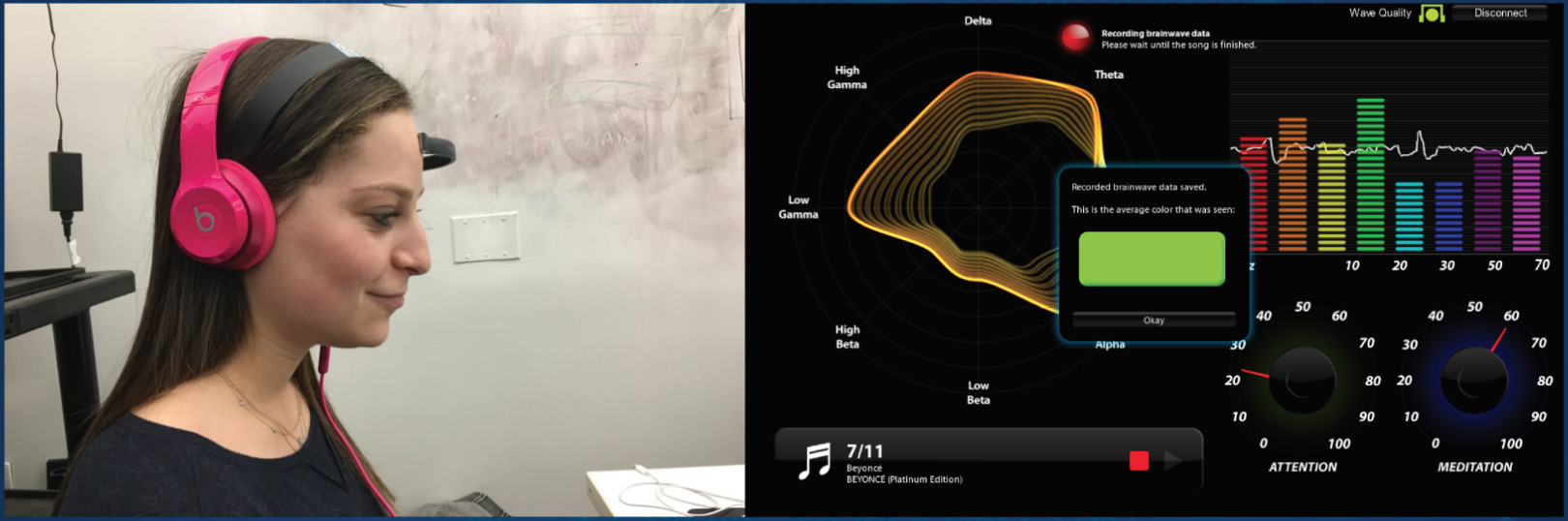

The user testing prototypes incorporated biosensing technology. In Usability Prototype 1, the user tester wore the NeuroSky MindWave while listening to music. The NeuroSky included an application that displayed an analysis of the user’s brain activity for the song they were listening to. This prototype showed a significant difference in the data between music the user liked and music they disliked.

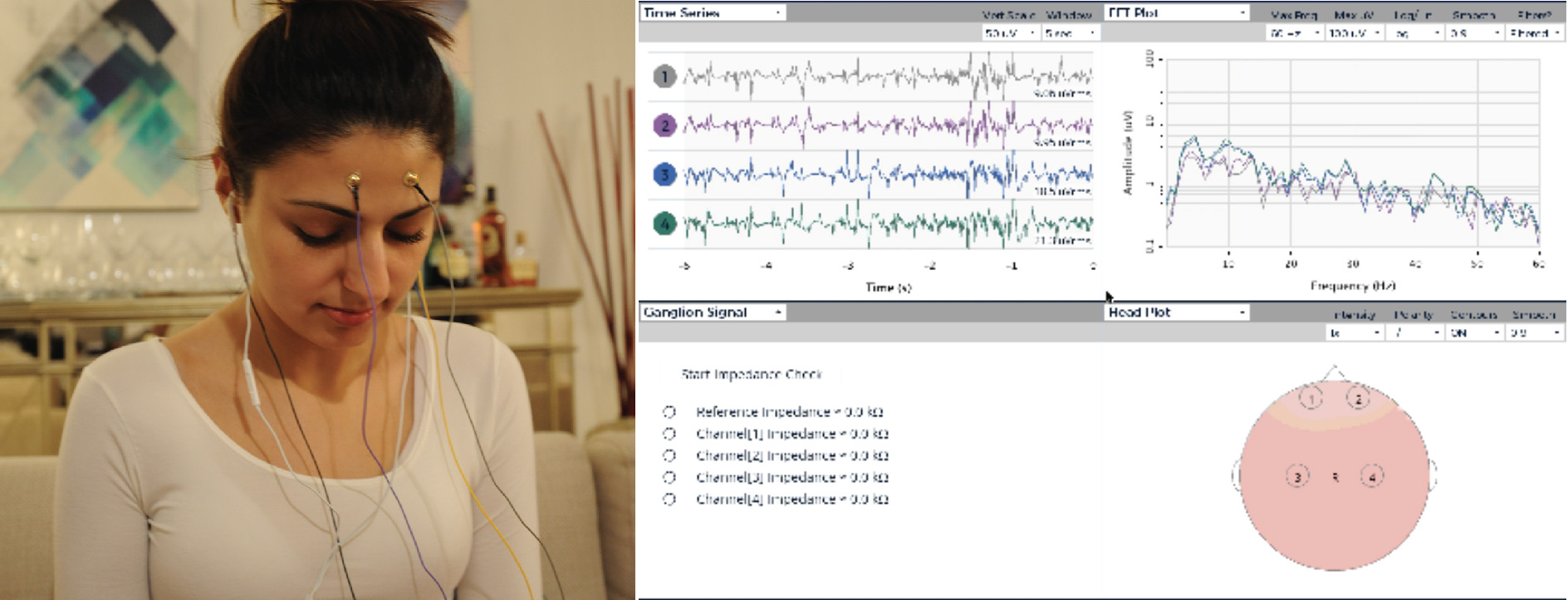

Usability Prototype 2 incorporated OpenBCI technology. This provided much more accurate data, showed which sections of the brain were active, and allowed for the analysis of alpha brainwaves. The data showed that, on average, user testers were in a meditative state while listening to a song they loved. When they listened to a song they were not familiar with, attention levels were dominant on average. However, when a song they did not like was played, the data appeared scattered and inconclusive.
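The pattern observed in these tests could be summarized per song with a small script. This is only a sketch under stated assumptions: the function name `summarize_response`, the 0 to 100 score scale, and the `scatter_limit` cutoff are hypothetical, and the sample values are made up for illustration rather than taken from the user tests.

```python
from statistics import mean, pstdev


def summarize_response(attention: list[float], meditation: list[float],
                       scatter_limit: float = 20.0) -> str:
    """Label one song's readings as 'meditative', 'attentive', or 'inconclusive'.

    attention and meditation are per-sample scores recorded while the song
    played (assumed 0-100). scatter_limit is an illustrative cutoff on the
    population standard deviation, not a validated value.
    """
    if not attention or not meditation:
        raise ValueError("both score lists must contain at least one sample")

    # Widely scattered samples are treated as inconclusive.
    scatter = max(pstdev(attention), pstdev(meditation))
    if scatter > scatter_limit:
        return "inconclusive"

    # Otherwise the state with the higher average wins; ties count as attentive.
    return "meditative" if mean(meditation) > mean(attention) else "attentive"


if __name__ == "__main__":
    # Made-up samples: steady readings with higher meditation than attention.
    print(summarize_response([30, 35, 32], [70, 72, 68]))
    # Made-up samples: readings that swing widely from sample to sample.
    print(summarize_response([10, 90, 20, 85], [15, 80, 25, 95]))
```

In terms of the observations above, a "meditative" label would correspond to a loved song and an "attentive" label to an unfamiliar one, though a handful of test sessions is not enough to treat that mapping as reliable.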