Polyphonic Synergy

Polyphonic Synergy is an interactive music installation that allows users to express group creativity through music.

Polyphonic Synergy is a musical instrument designed for multiple users. The interface is a flat surface with hidden sensor points that trigger sound and visuals in response to the users' hand movements. The users face each other with a thin wall between them that prevents them from seeing each other. The purpose of this interaction is for the users to work together and play by ear rather than by sight. The target audience is music lovers and anyone who wants to embrace their creativity. I am also aiming to build a multi-user environment that creates a shared experience.

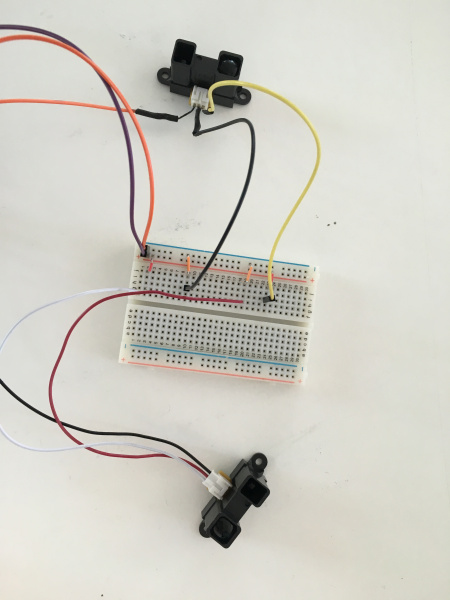

The build uses an Arduino for sensing motion and Max MSP for generating sound. The Arduino reads the analog signals from two individual Sharp infrared sensors to determine the values they detect. The Arduino sends these readings over its serial port to Max MSP, where the sound clips are triggered. In Max MSP, each sound clip is paired with an if statement that triggers it when the sensor reading falls within that clip's corresponding range.
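The range-based triggering can be illustrated outside Max with a short Python sketch. It assumes the Arduino prints both readings on one line separated by a comma (for example `412,87`, as `Serial.print` followed by `Serial.println` would produce), and the ranges and clip names are hypothetical placeholders, not the project's actual values. Each reading is checked against a list of ranges, which mirrors a chain of if statements.

```python
from typing import List, Optional, Tuple

# Hypothetical ranges of raw 10-bit readings (0-1023) mapped to hypothetical clip names.
# The real thresholds would come from testing the sensors in the serial monitor.
CLIP_RANGES = {
    "sensor_a": [(100, 299, "melody_low.wav"), (300, 499, "melody_mid.wav"), (500, 1023, "melody_high.wav")],
    "sensor_b": [(100, 299, "kick.wav"), (300, 499, "snare.wav"), (500, 1023, "hihat.wav")],
}


def parse_line(line: str) -> Tuple[int, int]:
    """Split a serial line such as '412,87' into two integer readings."""
    a, b = line.strip().split(",")
    return int(a), int(b)


def clip_for(reading: int, ranges: List[Tuple[int, int, str]]) -> Optional[str]:
    """Return the clip whose inclusive range contains the reading, like a chain of ifs."""
    for low, high, clip in ranges:
        if low <= reading <= high:
            return clip
    return None  # no hand in a playable range: stay silent


def clips_for_line(line: str) -> Tuple[Optional[str], Optional[str]]:
    """Map one serial line to the clip (or None) for each of the two sensors."""
    a, b = parse_line(line)
    return clip_for(a, CLIP_RANGES["sensor_a"]), clip_for(b, CLIP_RANGES["sensor_b"])


print(clips_for_line("412,87\n"))  # ('melody_mid.wav', None)
```

Keeping the ranges in a table rather than hard-coding each comparison makes it easy to retune thresholds after recalibrating the sensors.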

Design Process

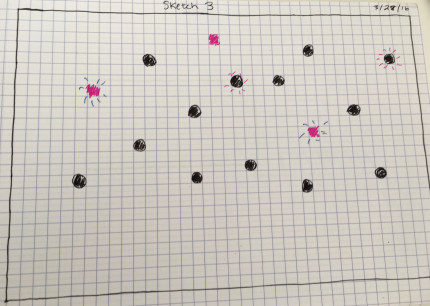

I began by sketching the general idea and plans for what I wanted to create. This series of sketches helped me clarify what I wanted to design and what might not work.

Next, I researched which technology would best achieve my goals. I considered Leap Motion because it is a touch-free option that still relies on hand interaction and offers powerful capabilities. However, Leap Motion has a limited range and would not support the group interaction this multi-user project requires. To create my sound clips and control how they are activated, I chose Max MSP, which is well suited to working with sound and sequencing.

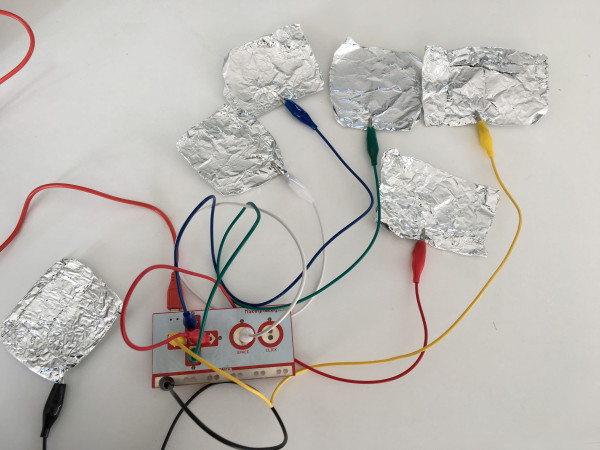

To experiment with touch activation, I built my first prototype with a Makey Makey and Max, which let me get a sense of how the interface should feel and test the physicality of the project. The Makey Makey was very helpful in the design process because it helped me understand how a multi-user interface works. With that understanding, I moved on from the Makey Makey to more advanced technology.

For the next prototype, I incorporated an Arduino and two Sharp infrared proximity sensors, along with Max, to better facilitate multi-user interaction. The sensors respond to hand movement based on distance, and I have set different sound clips to play depending on the position of the user's hand. I tested the numerical values in the Arduino serial monitor and the Max console to determine the sensors' working range and how to program the sound clips. The sensors are mounted on opposite sides of a piece of cardboard so that the users face each other while performing.
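One way to turn the numbers observed in the serial monitor into clip ranges is sketched below in Python. This is an assumed approach, not necessarily how the ranges were chosen here: take the logged readings, drop a small fraction of outliers at each end to estimate the working range, then split that range into equal-width zones, one per clip. The function names and the `trim` parameter are hypothetical.

```python
from typing import List, Tuple


def working_range(samples: List[int], trim: float = 0.05) -> Tuple[int, int]:
    """Estimate the usable (min, max) reading, ignoring the `trim` fraction at each end."""
    ordered = sorted(samples)
    k = int(len(ordered) * trim)  # number of outliers to drop from each end
    kept = ordered[k:len(ordered) - k] if k else ordered
    return kept[0], kept[-1]


def zone_thresholds(low: int, high: int, zones: int) -> List[Tuple[int, int]]:
    """Split [low, high] into `zones` contiguous, roughly equal inclusive bands."""
    width = (high - low + 1) / zones
    bands = []
    for i in range(zones):
        start = low + round(i * width)
        end = low + round((i + 1) * width) - 1
        bands.append((start, end))
    return bands


# Hypothetical readings logged while sweeping a hand toward and away from one sensor.
logged = [80, 95, 120, 300, 450, 610, 640, 900, 20, 500]
low, high = working_range(logged, trim=0.1)
print(low, high)                      # 80 640
print(zone_thresholds(100, 399, 3))   # [(100, 199), (200, 299), (300, 399)]
```

Equal-width zones are only a starting point; because the Sharp sensors do not respond linearly to distance, zones may need hand-tuning so each one feels equally wide to a performer.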

At this stage in prototyping, I am using preexisting sound clips from freesound.org. They include bright, melodic music and simple percussion beats, which I intend to combine. In the next stage, I plan to add individual musical pitches to give users more opportunities for creativity.
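As a possible direction for the planned individual pitches, the Python sketch below quantizes a sensor reading onto a musical scale and converts the resulting MIDI note to a frequency using equal temperament with A4 (MIDI note 69) at 440 Hz, the same conversion Max's `mtof` object performs. The choice of a C major pentatonic scale and the helper names are assumptions for illustration; a pentatonic scale is one common way to keep two players consonant when they cannot see each other.

```python
from typing import List

# Hypothetical scale choice: C major pentatonic as MIDI note numbers (C4 D4 E4 G4 A4 C5).
C_MAJOR_PENTATONIC = [60, 62, 64, 67, 69, 72]


def reading_to_note(reading: int, low: int, high: int, scale: List[int] = C_MAJOR_PENTATONIC) -> int:
    """Quantize a reading in [low, high] to one note of `scale`, clamping out-of-range values."""
    clamped = min(max(reading, low), high)
    fraction = (clamped - low) / (high - low)
    index = min(int(fraction * len(scale)), len(scale) - 1)  # top reading maps to last note
    return scale[index]


def midi_to_hz(note: int) -> float:
    """Equal-temperament frequency: 440 * 2^((note - 69) / 12)."""
    return 440.0 * 2 ** ((note - 69) / 12)


note = reading_to_note(400, low=100, high=700)
print(note, round(midi_to_hz(note), 2))  # 67 392.0
```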